Put society in the loop

The term human-in-the-loop (HITL) describes a computational process (e.g. a flight simulator) that includes interaction with a human (e.g. a pilot in training). When it comes to AI, putting humans in the loop can fulfill multiple objectives. First, humans can generate realistic data to train AI algorithms. Second, humans can identify mistakes in the AI’s predictions and classifications. Third, a human can exercise supervisory control by spotting and correcting AI misbehavior. Finally, the human is an accountable entity that can be held responsible in case the system misbehaves.
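To make these roles concrete, here is a minimal Python sketch of one common HITL pattern: confident predictions pass through automatically, while uncertain ones are routed to a human reviewer whose corrections can later serve as training data. The names `Prediction`, `hitl_review`, `ask_human`, and the 0.8 threshold are illustrative assumptions, not part of any particular system or library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Prediction:
    item: str          # identifier of the thing being classified
    label: str         # label proposed by the AI
    confidence: float  # AI's confidence in that label, between 0 and 1


def hitl_review(
    predictions: List[Prediction],
    ask_human: Callable[[Prediction], str],
    threshold: float = 0.8,  # assumed cut-off; a real system would tune this
) -> Tuple[Dict[str, str], List[Tuple[str, str]]]:
    """Route low-confidence predictions to a human reviewer.

    Returns the final labels and the list of human corrections,
    which could be fed back as new training data.
    """
    final_labels: Dict[str, str] = {}
    corrections: List[Tuple[str, str]] = []
    for p in predictions:
        if p.confidence >= threshold:
            # The AI is confident enough: accept its label unreviewed.
            final_labels[p.item] = p.label
            continue
        # Otherwise the human has the final say (supervisory control).
        human_label = ask_human(p)
        final_labels[p.item] = human_label
        if human_label != p.label:
            # The human spotted a mistake: record it for retraining.
            corrections.append((p.item, human_label))
    return final_labels, corrections
```

In this sketch `ask_human` stands in for whatever review interface exists; in practice it might be a labeling tool or an operator console. The sketch does not capture accountability, which is an institutional matter rather than a programming one.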

While HITL is a useful paradigm for overseeing AI systems, it does not sufficiently emphasize the role of society as a whole in such oversight. In a HITL AI system, there is a well-understood and agreed-upon goal—e.g. everyone on an airplane agrees on the goal of arriving safely at the destination, and the human pilot is in the loop just in case the autopilot fails.

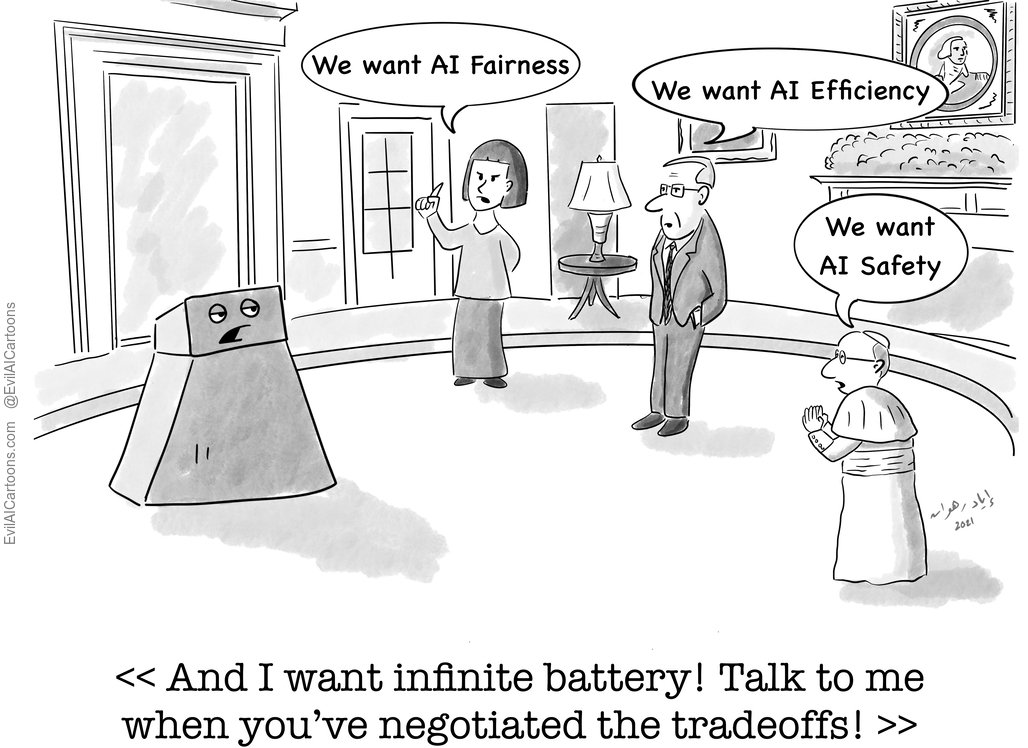

But when it comes to broader societal oversight, people do not agree on what the goal is! Or more accurately, they do not agree on how to prioritize different goals: should we increase system efficiency at the expense of slightly reducing safety? Should the AIs maximize societal fairness, even if that reduces overall wealth? These are fundamental social and economic questions, typically tackled through politics. That is, we need to agree, among ourselves, about what the social contract is. This ‘algorithmic’ social contract then manifests itself in the AI’s programming, under our continued oversight.

Put another way, to ensure society’s oversight over AI, we need to move from HITL to what I call Society-in-the-loop (SITL), defined as follows:

Society-in-the-loop = Human-in-the-loop + Social contract

That is, putting society in the loop combines human oversight in the decision-making loop of AI systems with the negotiation of a social contract among citizens about which values should actually guide that oversight.
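As a rough illustration only, the two ingredients can be sketched in Python. The sketch assumes, purely for simplicity, that the social contract is a set of goal weights obtained by averaging citizens' stated priorities; real negotiation happens through politics and is far richer than an average. The functions `negotiate_contract` and `choose_action`, and the `human_veto` callback, are hypothetical names introduced for this example.

```python
from statistics import mean
from typing import Callable, Dict, List, Optional

Weights = Dict[str, float]


def negotiate_contract(citizen_weights: List[Weights]) -> Weights:
    """Toy 'social contract': average each goal's weight across citizens.

    Averaging is an assumption of this sketch, standing in for a
    political process of negotiation.
    """
    goals = citizen_weights[0].keys()
    return {g: mean(c[g] for c in citizen_weights) for g in goals}


def choose_action(
    actions: Dict[str, Weights],
    contract: Weights,
    human_veto: Optional[Callable[[str], bool]] = None,
) -> Optional[str]:
    """Pick the action that best serves the contract, subject to human oversight."""
    def score(name: str) -> float:
        # Weighted sum of how well the action serves each agreed goal.
        return sum(contract[g] * actions[name][g] for g in contract)

    # Try actions from best to worst; the human in the loop may veto any.
    for name in sorted(actions, key=score, reverse=True):
        if human_veto is None or not human_veto(name):
            return name
    return None  # every candidate was vetoed


citizens = [
    {"safety": 0.8, "efficiency": 0.2},
    {"safety": 0.6, "efficiency": 0.4},
]
actions = {
    "fast": {"safety": 0.5, "efficiency": 1.0},
    "cautious": {"safety": 1.0, "efficiency": 0.4},
}
contract = negotiate_contract(citizens)
chosen = choose_action(actions, contract)
```

With these made-up numbers the agreed weights favor safety, so the sketch selects the `cautious` action; a human overseer passed in as `human_veto` could still reject it, in which case the next-best action is chosen.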

References

Monarch, R. Human-in-the-Loop Machine Learning. (Manning Publications, 2021).

Holzinger, A. Interactive machine learning for health informatics: when do we need the human-in-the-loop? Brain Inform 3, 119–131 (2016).

Sheridan, T. B. Supervisory control. Handbook of Human Factors and Ergonomics, Third Edition 1025–1052 (2006).

Rahwan, I. Society-in-the-loop: programming the algorithmic social contract. Ethics Inf. Technol. (2017) doi:10.1007/s10676-017-9430-8.