The Ethical Opt-Out Problem in AI

Recall that a social dilemma is a situation in which people, each acting in their own self-interest, bring about an outcome that is worse for everyone. A classic example of a social dilemma is the ‘Tragedy of the Commons.’ A group of farmers share common land on which their sheep graze. Let’s suppose that each farmer brings ten sheep. At this rate of grazing, the grass regenerates and the land remains sustainable. Then, one farmer thinks: if I bring one extra sheep, I’ll make more money on wool and milk, and no one else suffers. Indeed, nothing changes if one extra sheep is introduced. But if every farmer thought this way, and brought an extra sheep, there would be too many sheep, and the grass would fail to regenerate. The commons would become arid, to the detriment of all farmers, not to mention the sheep, who would probably all get sent to the slaughterhouse.
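The farmers' reasoning can be made concrete with a toy payoff model. This is only a sketch under invented assumptions: the number of farmers, the value of a sheep, the small slack the pasture tolerates, and the rate at which overgrazing destroys value are all hypothetical numbers chosen for illustration, not figures from any study.

```python
# Toy model of the Tragedy of the Commons.
# Every constant below is an illustrative assumption, not empirical data.
N_FARMERS = 10
SHEEP_EACH = 10                 # the sustainable herd per farmer, as in the text
TOLERATED_SHEEP = 102           # assumed: the pasture absorbs a couple of extra sheep
BASE_VALUE = 10.0               # assumed income per sheep on healthy pasture
LOSS_PER_EXCESS_SHEEP = 0.5     # assumed income lost per sheep, per sheep over the limit


def value_per_sheep(total_sheep: int) -> float:
    """Income per sheep, which falls once the pasture is overgrazed."""
    excess = max(0, total_sheep - TOLERATED_SHEEP)
    return max(0.0, BASE_VALUE - LOSS_PER_EXCESS_SHEEP * excess)


def payoff(own_sheep: int, others_sheep: int) -> float:
    """Income of one farmer, given their own herd and everyone else's combined herd."""
    return own_sheep * value_per_sheep(own_sheep + others_sheep)


others_restrained = (N_FARMERS - 1) * SHEEP_EACH        # nine farmers with 10 sheep each
others_greedy = (N_FARMERS - 1) * (SHEEP_EACH + 1)      # nine farmers with 11 sheep each

print("everyone restrained:     ", payoff(10, others_restrained))
print("I alone add a sheep:     ", payoff(11, others_restrained))
print("everyone adds a sheep:   ", payoff(11, others_greedy))
print("I alone stay restrained: ", payoff(10, others_greedy))
```

Under these assumed numbers, bringing the extra sheep pays off for an individual farmer whatever the others do, yet when every farmer follows that logic each ends up with less than if all had shown restraint. That is the defining structure of a social dilemma.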

Social dilemmas are traditionally solved through regulation. For example, the government may impose a limit on the number of sheep each farmer is allowed to bring, or may levy an increasing tax on each additional sheep. The same principle is behind carbon caps and taxes.

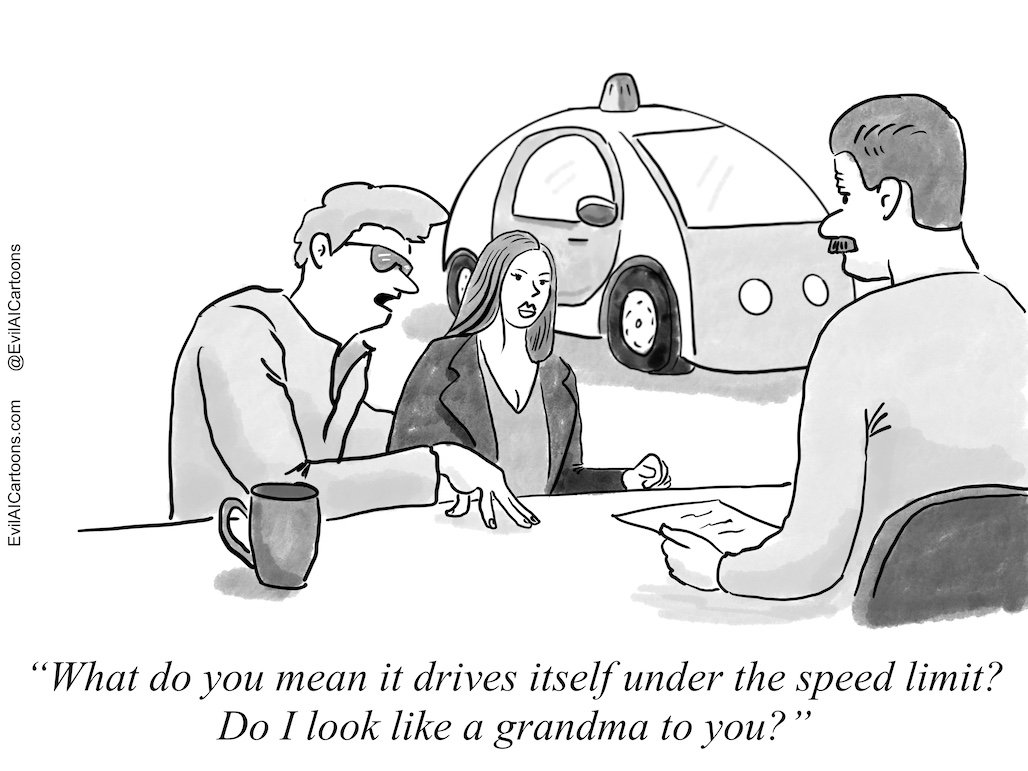

Back to the ethical dilemma: what if we proposed regulations that require all self-driving cars to minimize total injuries? This way, no one feels like a sucker for being the only one with the self-sacrificing car, while everyone else enjoys their self-protecting vehicles. One rule for everyone. We asked our survey participants whether they would support such regulation. They did not. Moreover, people said they would not purchase self-driving cars if the cars were required to minimize harm, rather than always prioritizing the passenger. They would rather drive themselves. This outcome would be disastrous, especially if self-driving cars end up, as promised by their proponents, eliminating the vast majority of road accidents. My co-authors and I called this the ‘ethical opt-out problem’: forgoing the societal benefits of AI because consumers can opt out of using it unless it delivers the outcomes most favorable to themselves at the expense of others.

So we are in a pickle. If we legally mandate that all self-driving cars be pro-social (caring about the safety of the greatest number of people), we might delay their adoption by consumers, which may lead to more deaths in the interim. But if we give in to consumer demands to put their safety above all, we alienate vulnerable road users like pedestrians, and may end up with public backlash.

References

Hardin, G. The Tragedy of the Commons. Science 162, 1243–1248 (1968).

Ostrom, E. Governing the Commons: The Evolution of Institutions for Collective Action. (Cambridge University Press, 1990).

Bonnefon, J.-F., Shariff, A. & Rahwan, I. The Moral Psychology of AI and the Ethical Opt-Out Problem. in Ethics of Artificial Intelligence (ed. Liao, S. M.) (Oxford University Press, 2020).